Caching with Redis

Presented by @tlhunter@mastodon.social

Distributed Systems with Node.js: bit.ly/34SHToF

I: The Basics

What is caching?

- Caches make your application faster

- Cache problems must not break app

- Getting outdated data from cache could be bad

"There are only two hard things in Computer Science: cache invalidation and naming things." (Phil Karlton)

Pseudocode: Read

- This forms the basis of all caching

MY_RECORD = CACHE.GET("RECORD_100")

IF NOT MY_RECORD

  MY_RECORD = DATABASE.GET("RECORD_100")

  CACHE.SET("RECORD_100", MY_RECORD)

RETURN MY_RECORD

Pseudocode: Modify

- Easy if app writing to DB also writes to Cache
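As a rough Python sketch of the read and modify flows in these two pseudocode slides: the `DictStore` class and the function names `read_record` and `modify_record` are hypothetical stand-ins for illustration, not a Redis client API. The modify path here uses the delete variant, so the next read repopulates the cache.

```python
from typing import Dict, Optional


class DictStore:
    """In-memory stand-in for a cache or database client (hypothetical)."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


def read_record(cache: DictStore, database: DictStore, key: str) -> Optional[str]:
    """Try the cache first; on a miss, load from the database and fill the cache."""
    record = cache.get(key)
    if record is None:
        record = database.get(key)
        if record is not None:
            cache.set(key, record)
    return record


def modify_record(cache: DictStore, database: DictStore, key: str, new_record: str) -> None:
    """Write to the database, then drop the cached copy so it cannot go stale."""
    database.set(key, new_record)
    cache.delete(key)
```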

DATABASE.SET("RECORD_100", NEW_RECORD)

// Then do one of these; they are almost the same:

// SET refreshes the cached copy, DELETE lets the next read repopulate it

CACHE.SET("RECORD_100", NEW_RECORD)

CACHE.DELETE("RECORD_100")

Nomenclature

- Naming considerations to prevent collision

- Must contain the minimum to identify data

- Take keyname byte length into consideration

appversion-collection-cversion-etc:id

v5-animaltypes-v2-en_US:123
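One way to keep key names consistent is to build them in a single helper. The sketch below follows the pattern shown on this slide; the function name `build_key` and its parameter names are assumptions made for illustration.

```python
def build_key(app_version: str, collection: str, collection_version: str,
              locale: str, record_id: int) -> str:
    """Join the namespace parts with '-' and append the record id after ':'."""
    namespace = "-".join([app_version, collection, collection_version, locale])
    return f"{namespace}:{record_id}"


key = build_key("v5", "animaltypes", "v2", "en_US", 123)
# Every cached record carries the full key, so its byte length adds up.
key_bytes = len(key.encode("utf-8"))
```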

II: Passive Cache Invalidation

Expiration / TTL

- Set a Time To Live (TTL) on a per-key basis

- Most of these commands have a millisecond equivalent (PSETEX, SET ... PX, PEXPIRE, PEXPIREAT, PTTL)

SETEX keyname 120 "my text"

SET keyname "my text" EX 120

SET keyname "my text"

EXPIRE keyname 120

EXPIREAT keyname epoch

TTL keyname

TOUCH keyname
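To make the TTL semantics concrete, here is a small in-memory simulation in Python. It is a sketch rather than a Redis client: the `TtlCache` class and its injectable `clock` parameter are hypothetical, and it only models lazy expiry on access. The `ttl` return values follow Redis's `TTL` convention of -2 for a missing key and -1 for a key with no expiry.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCache:
    """Toy per-key TTL store (hypothetical; mimics SET ... EX, EXPIRE and TTL)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[str, Optional[float]]] = {}

    def set(self, key: str, value: str, ex: Optional[float] = None) -> None:
        """Store a value, optionally expiring after `ex` seconds."""
        deadline = None if ex is None else self._clock() + ex
        self._data[key] = (value, deadline)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, deadline = entry
        if deadline is not None and deadline <= self._clock():
            del self._data[key]  # expired: remove lazily on access
            return None
        return value

    def expire(self, key: str, seconds: float) -> bool:
        """Attach a TTL to an existing key; False if the key does not exist."""
        value = self.get(key)
        if value is None:
            return False
        self._data[key] = (value, self._clock() + seconds)
        return True

    def ttl(self, key: str) -> int:
        """Remaining whole seconds, -1 if no expiry is set, -2 if key is missing."""
        if self.get(key) is None:
            return -2
        _, deadline = self._data[key]
        if deadline is None:
            return -1
        return int(deadline - self._clock())
```

Passing a fake clock makes the behaviour easy to check without sleeping.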

Key Eviction

- Delete (evict) keys when low on memory

- Set the maximum memory Redis is allowed to use

- These changes are global for the Redis instance

- You may want multiple Redis instances per app

maxmemory 512mb

maxmemory-policy <policy-name>

Key Eviction Policies

noeviction: Error when adding data (default)

allkeys-lru*: Evict any key based on last usage

allkeys-random*: Evict any key randomly

volatile-lru: Evict TTL key based on last usage

volatile-random: Evict TTL key randomly

volatile-ttl: Evict a key with shortest TTL

* Entire Redis instance is now volatile
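The idea behind `allkeys-lru` can be illustrated with a toy Python cache. This is a simplified sketch under two assumptions: it limits the number of entries rather than bytes of memory, and it tracks exact recency, whereas Redis approximates LRU by sampling keys. The `LruCache` class is hypothetical.

```python
from collections import OrderedDict
from typing import Optional


class LruCache:
    """Toy cache that evicts the least recently used key when full."""

    def __init__(self, max_entries: int) -> None:
        self._max_entries = max_entries
        self._data: "OrderedDict[str, str]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # reading counts as usage
        return self._data[key]

    def set(self, key: str, value: str) -> None:
        self._data[key] = value
        self._data.move_to_end(key)  # writing counts as usage too
        while len(self._data) > self._max_entries:
            self._data.popitem(last=False)  # drop the oldest entry
```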

LFU: Least Frequently Used (Redis 4.0)

- Evict keys which aren't used that frequently

allkeys-lfu*: Evict any infrequently used key

volatile-lfu: Evict TTL infrequently used key

* Entire Redis instance is now volatile
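For contrast with last-usage eviction, a frequency-based policy can be sketched in Python as follows. This is only an illustration of the concept: it keeps an exact hit count per key and limits entries rather than memory, while Redis uses an approximate counter that decays over time. The `LfuCache` class is hypothetical.

```python
from typing import Dict, Optional


class LfuCache:
    """Toy cache that evicts the key with the fewest reads when full."""

    def __init__(self, max_entries: int) -> None:
        self._max_entries = max_entries  # assumed to be at least 1
        self._data: Dict[str, str] = {}
        self._hits: Dict[str, int] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        self._hits[key] += 1
        return self._data[key]

    def set(self, key: str, value: str) -> None:
        if key not in self._data and len(self._data) >= self._max_entries:
            # Evict the least frequently read key (ties: oldest inserted).
            coldest = min(self._hits, key=self._hits.__getitem__)
            del self._data[coldest]
            del self._hits[coldest]
        self._data[key] = value
        self._hits.setdefault(key, 0)
```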

Pattern: Expire on Read

- Store object as hash with time metadata

- Track expiry time but don't use key TTL

- Quickly reply to a request with old data

- Immediately update cache with new data

- E.g. first read of the day has last night's data
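The bullets above can be sketched in Python roughly as follows. The function name `read_with_refresh`, the `load` callback, and the plain dict used as a cache are assumptions for illustration. In a real server the reply would be sent before the refresh runs; this synchronous sketch simply returns the old value after refreshing.

```python
from typing import Callable, Dict, Tuple

TWO_DAYS = 2 * 24 * 60 * 60  # lifetime in seconds


def read_with_refresh(cache: Dict[str, Tuple[str, float]],
                      load: Callable[[str], str],
                      key: str,
                      now: float,
                      lifetime: float = TWO_DAYS) -> str:
    """Return the cached data (possibly stale) and refresh it if expired.

    Each cache entry stores (data, expires_at) instead of using a key TTL,
    so stale data stays available for a fast reply. Assumes the key exists.
    """
    data, expires_at = cache[key]
    if expires_at < now:
        # Stale: fetch fresh data so the *next* reader sees it.
        cache[key] = (load(key), now + lifetime)
    return data
```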

Pattern: Expire on Read

META = CACHE.GET("R100")

REPLY(META.DATA) // Potentially old data

IF META.EXPIRE < NOW

  NEW = DATABASE.GET("R100")

  LATER = NOW.ADD("2 DAYS")

  CACHE.SET("R100", DATA: NEW, EXPIRE: LATER)

III: Active Cache Invalidation

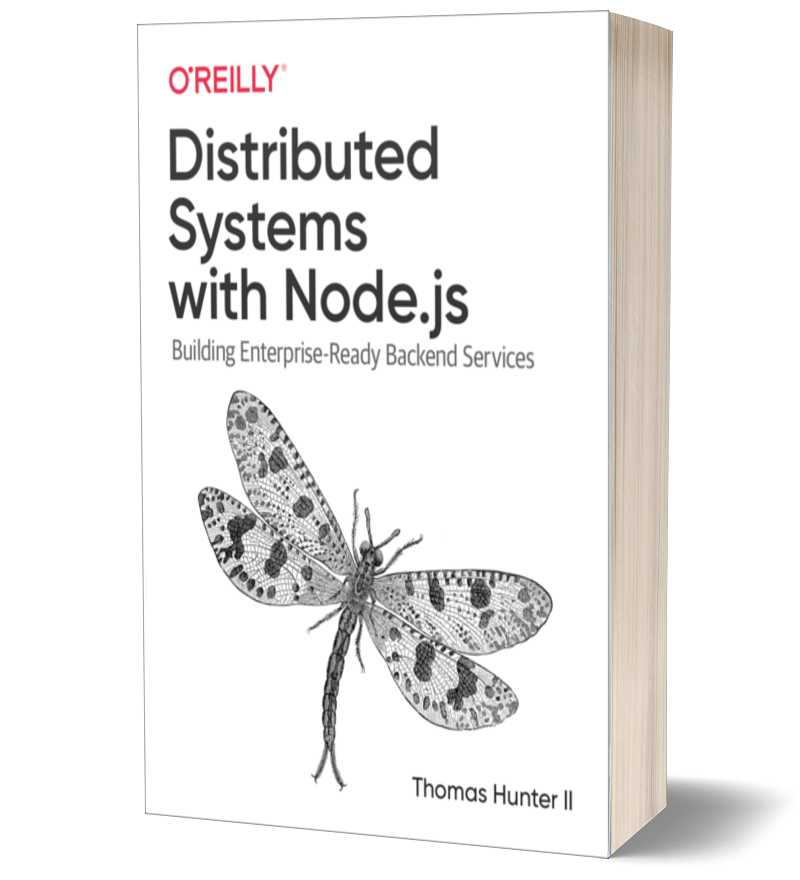

Distributed Systems Complexity

- The simple cache set/delete no longer works

- How does Consumer know when to update cache?

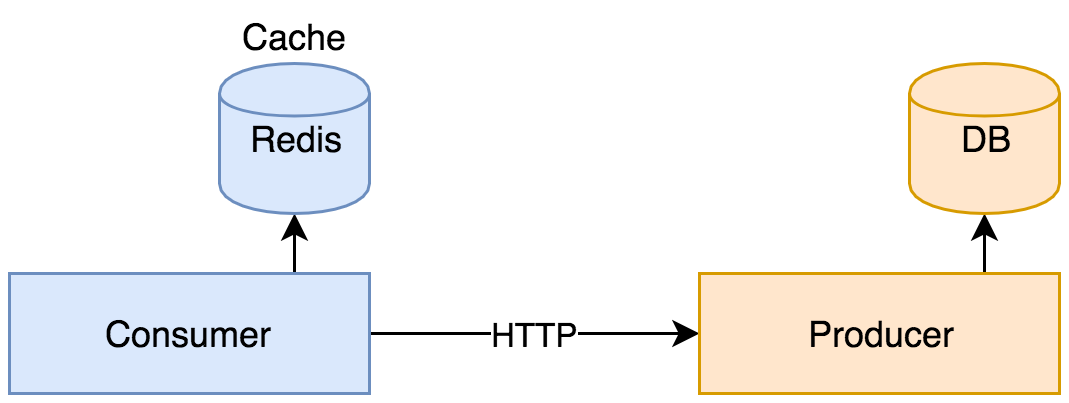

Distributed Systems Naive Approach

- Consumers connect to a Producer-owned cache

- Doubles the size of the API surface

- Doubles the documentation, locations for error, etc.

- Why not just have Producer be really fast?

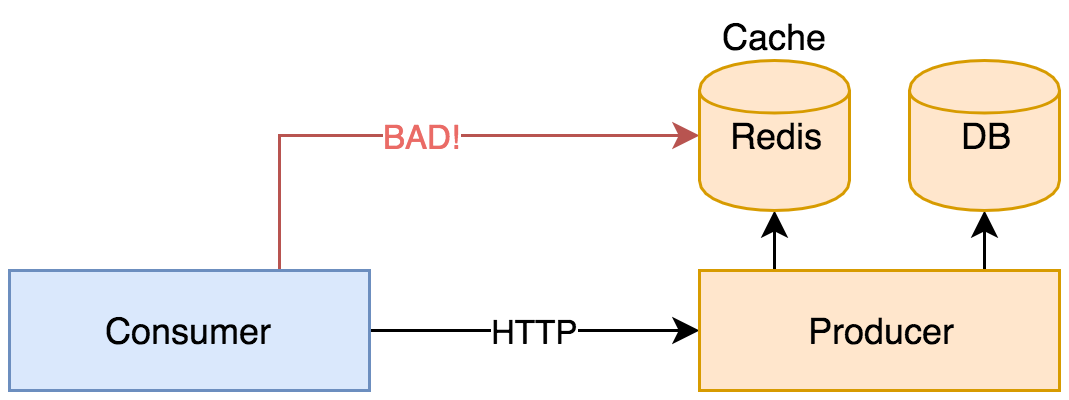

Pub/Sub to the Rescue

- Here is a method we can use to invalidate caches

- Producer publishes a message describing resource

- Consumer is in charge of updating/removing data

Pub/Sub to the Rescue

- Subscribe to channels representing collections

- Many producers could use the same Redis server

- Publish enough information to identify the resource

SUBSCRIBE service-name:collection:version

PUBLISH service-name:collection:version '{"lang":"en_US","id":123}'

PUBLISH service-name:collection:version '{"lang":"en_US","id":123,"name":"xyz"}'
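On the Consumer side, a message handler might look like the Python sketch below. It leaves out the Redis subscription itself and only shows what happens to the local cache once a message arrives. The function names, the cache key layout, and the rule that a message carrying only identifiers means "remove" while a message carrying extra fields means "update" are all assumptions for illustration.

```python
import json
from typing import Dict

ID_FIELDS = ("lang", "id")  # fields assumed to identify the resource


def cache_key(channel: str, message: dict) -> str:
    """Build a local cache key from the channel and identifying fields."""
    return f"{channel}:{message['lang']}:{message['id']}"


def handle_message(cache: Dict[str, dict], channel: str, payload: str) -> None:
    """Apply one published message to the Consumer's local cache."""
    message = json.loads(payload)
    key = cache_key(channel, message)
    has_data = any(field not in ID_FIELDS for field in message)
    if has_data:
        cache[key] = message   # message carries the new record: update
    else:
        cache.pop(key, None)   # identifiers only: remove the stale entry
```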

Questions?

- Follow me: @tlhunter@mastodon.social

- Distributed Systems with Node.js: bit.ly/34SHToF