Node.js Modules, Packages, and SemVer

The terminology used in the Node.js ecosystem for referring to the different levels of code encapsulation can get confusing. Admittedly, I was guilty of saying "package" when I really meant "module" for the first several years of my Node.js development career. This article, which is based on content from my recently-released book Distributed Systems with Node.js, aims to clarify this terminology.

Once you're done reading this you'll understand the relationship between Node.js modules and npm packages, how packages are laid out on disk once they're installed, and how npm leverages SemVer to decide which versions of packages will meet the needs of the application, and where on disk the packages should be installed.

Node.js Modules

Simply put, a Node.js module is a JavaScript file that can be required. The Node.js runtime currently supports two different types of modules. The first is the CommonJS module, and is the one that Node.js has supported the longest. Files in this format can end in *.js or *.cjs. The second is the ECMAScript module, a relatively new addition to Node.js. The file extension for this format is either *.js or *.mjs.

The CommonJS module is a bit different than a JavaScript file that would ordinarily be loaded in a web browser. Specifically, CommonJS JavaScript files have a require() function that is available to it, for referencing other CommonJS files, while also exposing an exports object, for exporting functionality to other modules. Tools like Webpack and Browserify do make it possible to use CommonJS files in the browser.

Internally, when a CommonJS module is required by Node.js, it wraps the contents of the file in an Immediately Invoked Function Execution (IIFE). This is how the exports object and require function and some other Node.js features are made available. This wrapper looks like the following:

(function(exports, require, module, __filename, __dirname) {

// original content here

});

While it's possible in a JavaScript file loaded in the browser to set globals quite easily, such as declaring foo = 'bar', in these CommonJS files it's necessary to explicitly assign such globals to the global object. In Node.js, you can assign properties to global or globalThis (in browsers you can explicitly assign properties to window, self, or globalThis).

The require(mod) function has to jump through a few hoops before it's able to locate the proper module to be required. This process is known as the module resolution algorithm. Here's a basic version of what that process looks like:

- If mod is a built-in Node.js module like

httpthen load it - If mod starts with

/,./, or../then load the path to the file or directory - If a directory, look for a package.json file with a

mainfield and load that - If a directory doesn't contain a package.json then look for index.js

- If a file then load the exact filename, otherwise try with file extensions .js, .json, and .node

- Look for a mod directory inside

./node_modules/ - Ascend each parent directory looking for a

node_modules/directory for a match

That list might be a lot to process, so here's a list of example mod values and what the resolved path to the module looks like. These examples assume the require(mod) call is made from a file located at /srv/server.js:

| Require Statement | Module Path |

|---|---|

require('path') |

Built-in path module |

require('./my-mod.js') |

/srv/my-mod.js |

require('redis') |

/srv/node_modules/redis/, /node_modules/redis/ |

require('foo.js') |

/srv/node_modules/foo.js/, /node_modules/foo.js/ |

require('./foo') |

/srv/foo.js, /srv/foo.json, /srv/foo.node, /srv/foo/index.js |

As a tip, you should always be explicit and provide the file extension when requiring files. If you omit the extension, the intent of the require statement is now ambiguous, and if someone were to one day add a foo.js file when you're used to requiring foo.json, your code may break in a hard-to-diagnose manner.

Once a file does get loaded it gets added to the require cache. This is a key/value cache where the key is the absolute path to the resolved module filename, and the value is the result of that module's exports object. This is why you're able to require the same file that exports a singleton instance multiple times and each result points to the same singleton instance.

SemVer: Semantic Versioning

SemVer is a philosophy for releasing packages. It's used by many different platforms, including npm. SemVer version strings are made up of three numeric components separated by periods, such as 1.2.3. The first number is the major version, the second is the minor version, and the third is the patch version.

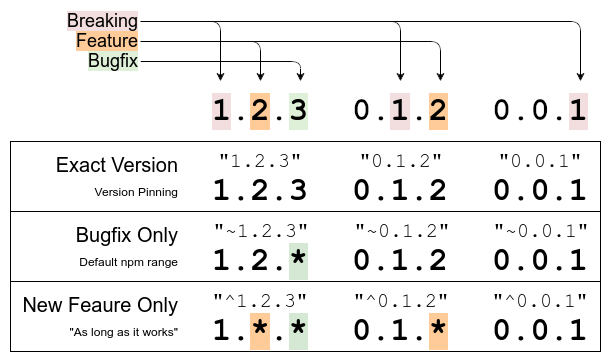

Each of these components has different meanings. Usually, the major number for a package should increment if a breaking change is made to the package. Next, the minor number should increment if a new feature is added. Finally, the patch number should increment if a bug fix is released. Incrementing a number means the numbers to the right of it can reset back to zero. These number can be larger than 9, unlike normal decimal numbers.

When leading zero numbers are involved, such as 0.1.2 or 0.0.1, then the most-significant digit (i.e. the number 1) takes on the role of the breaking changes, and the second most-significant number, if applicable, takes on the new features. This is just a special way of saying that package versions with leading zeros aren't necessarily the most stable of projects. Here's a diagram to help explain these version components:

Adherence to the SemVer philosophy is what holds the npm community together. Thanks to these assumptions about compatibility, applications are free to depend on "ranges" of packages, instead of exact specific package versions. Applications specify this range by adding entries to the dependencies field in their package.json files. This list is comprised of key/value pairs where the key is the name of the package and the value is some representation of the package version range. While there are several ways to denote these ranges, the following is a list of the most common ones:

"dependencies": {

"fastify": "^3.11.1",

"ioredis": "~4.22.0",

"pg": "8.5.1"

}

The fastify package uses a caret (^ symbol) to denote its version range. This is a way of saying that any compatible version of at least the specified version is acceptable. In this case it'll match 3.11.1, 3.11.9, 3.19.3, etc. However it will not match 3.11.0, 3.10.1, 4.0.1, etc. This is the default range used when installing a new package with npm.

The next package, ioredis, uses a tilde (~ symbol) for its version range. This is a way of saying that only bugfixes can be picked up, not even new features can be picked up. This can be useful in situations where you have very high coupling with the package, perhaps with a web framework that you extend very heavily. In this case 4.22.1 could get picked up, but not 4.23.0.

The final package, pg, uses no symbol, and will only ever pick up this exact package version. Sometimes this is referred to as "pinning" a package version.

Now, I will say, the importance of specifying these ranges isn't quite as important as it was several years ago. With the advent of the package-lock.json, which is covered in more detail later, package versions are no longer changing every time we run npm install. But, at the same time, it is the only source of "truth" for the package expectations of your application.

npm Packages and the node_modules/ directory

npm packages are archives that contain Node.js modules and other supporting files (JSON files, README.md, etc). Public packages are uploaded to the npmjs.com registry, while private ones may either be uploaded to the registry or to a private registry owned by the company. Node.js itself isn't actually aware of what an npm package is, it's only aware of directories and files located in the node_modules/ directory. It's up to the npm CLI to extract those packages and put their contents in the right location.

npm packages are very important to Node.js applications as Node.js itself lacks a lot of functionality that other platforms provide. This is an intentional design philosophy which has encouraged the npm package ecosystem to thrive. The relationship is symbiotic as well, with the sheer number of npm packages helping ease corporate adoption of the Node.js platform.

Nearly all Node.js applications will have dependencies. A dependency is an npm package that the application depends on. A dependency can either be a direct dependency, one that is listen in the package.json file, or a sub-dependency/transient dependency, which is a dependency of a dependency. This leads to a hierarchy of dependencies. Recall that the require() function can look in a node_modules/ directory relative to the file that is calling it, and it can also look in parent directories. A very naive version of a package installer could simply install packages on disk, reflecting the hierarchy of what each dependency needs, but better approaches exist.

Here's a demonstration of this. Pretend that an application has a package.json file that depends on foo@1.0.0 and bar@2.0.0. The foo package doesn't have dependencies, but bar does depend on foo@1.0.0. Here's what the naive approach could look like, where every single dependency has a node_modules/ directory of its dependencies:

node_modules/

foo/ (1.0.0)

bar/ (2.0.0)

node_modules/

foo/ (1.0.0)

One problem with this is with cyclical dependencies. If the foo package also depended on bar, then you would need an infinitely nested folder structure. Another problem with this approach is that the foo module is copied twice on disk, wasting space. Instead, when npm installs packages, it will de-duplicate them by hoisting a package higher in the tree. In this particular example the deeply nested foo package can be hoisted all the way to the top, where another foo package already resides:

node_modules/

foo/ (1.0.0)

bar/ (2.0.0)

The bar package won't find the foo package in it's node_modules/ directory, as it doesn't exist, and will instead ascend, finding the one adjacent to it.

This example is fairly simple, but there are many more complicated examples. For example, different packages can depend on packages of different versions or of ranges. It's then up to npm to find the best version of each package to satisfy the needs of other packages, and where to hoist them all to reduce disk space.

Also, only a single version of a package can exist in a given node_modules/ directory at a time. If the bar@2.0.0 package were to depend on foo@2.0.0, then it wouldn't be hoist-able. Instead, npm would need to have the two versions both exist on disk:

node_modules/

foo/ (1.0.0)

bar/ (2.0.0)

node_modules/

foo/ (2.0.0)

The package-lock.json file, and its forgotten predecessor npm-shrinkwrap.json, were made to lock down not only the direct dependencies of a project but its transient dependencies as well. Without such a file, the layout of packages on disk, and their versions, will change over time as new package versions are published.

This content is a condensed version of just one of the sections in Distributed Systems with Node.js. If you're looking to increase your Node.js development prowess, especially when it comes to building backend systems, I highly recommend checking it out.